Encrypted Inference with Moose and HuggingFace

deep learning

mpc

cryptography

The other day, I was inspired by the blog post Sentiment Analysis on Encrypted Data with Homomorphic Encryption, co-written by Zama and HuggingFace. Zama has created an excellent encrypted machine learning library, Concrete-ML, based on fully homomorphic encryption (FHE). Concrete-ML enables data scientists to easily turn their machine learning models into a homomorphic equivalent in order to perform inference on encrypted data. In the blog post, the authors demonstrate how to perform sentiment analysis on encrypted data with this library. As you can imagine, sometimes you will need to perform sentiment analysis on text containing sensitive information. With FHE, the data remains encrypted throughout the computation, which lets data scientists provide a machine learning service to a user while maintaining data confidentiality.

A General Purpose Deep Learning Architecture - Perceiver IO From Scratch

deep learning

code

from scratch

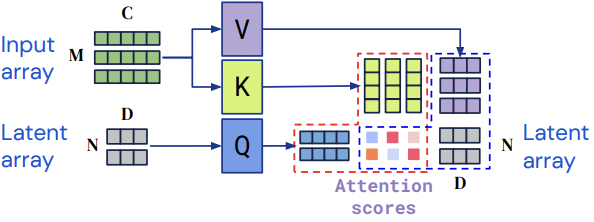

In recent years, we have seen tremendous progress across machine learning tasks, from image classification, object detection, and translation to question answering, text classification, and more. The main drivers of this progress are more compute resources, more data, new training approaches such as transfer learning, and more sophisticated neural network architectures. Fortunately, these models are available at our fingertips. If you tackle a computer vision task, you can leverage the PyTorch Image Models (timm) library. As of now, it contains more than 50 different types of architectures, translating into 600 models with different trade-offs in accuracy and inference time, depending on the task. For NLP, you can use the popular Transformers library from HuggingFace, which offers almost 80k models based on about 140 different types of architectures.
